In a typical website, some element of static reference data exists in the system. This static reference data might never change or might change infrequently. Rather than continually querying for the same data from the database, storing the data in a cache can provide great performance benefits. This article discusses how to store data in the in-process cache and the distributed cache.

This article is based on Azure in Action, published in October 2010. It is being reproduced here by permission from Manning Publications.

A cache is a temporary, in-memory store that contains duplicated data populated from a persisted backing store, such as a database. Because the data is held in memory, retrieving it from the cache is fast compared with retrieving it from the database. The trade-off is that if the host process or underlying hardware dies, the cached data is lost and the cache must be rebuilt from its persistent store.

Never rely on data being present in a cache. You should always populate cache data from a persisted storage medium such as Table storage, which allows you to fall back to that medium if the data isn’t present in the cache.
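The rule above is often called the cache-aside pattern. The following is a minimal sketch of it, not code from the book: the `ReferenceDataCache` class and the `loadFromStore` delegate are hypothetical names, and the delegate stands in for whatever Table storage or database query you already have.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical helper for illustration; not part of any Azure SDK.
public class ReferenceDataCache<TValue>
{
    private readonly ConcurrentDictionary<string, TValue> _cache =
        new ConcurrentDictionary<string, TValue>();

    // Stand-in for a query against the persistent store.
    private readonly Func<string, TValue> _loadFromStore;

    public ReferenceDataCache(Func<string, TValue> loadFromStore)
    {
        if (loadFromStore == null) throw new ArgumentNullException("loadFromStore");
        _loadFromStore = loadFromStore;
    }

    // Return the cached value if present; otherwise load it from the
    // persistent store and keep it for later calls. Under contention the
    // loader may run more than once for the same key, which is harmless
    // for read-only reference data.
    public TValue Get(string key)
    {
        return _cache.GetOrAdd(key, _loadFromStore);
    }

    // Simulates losing the cache, for example when the host process restarts.
    public void Clear()
    {
        _cache.Clear();
    }
}
```

After a call to `Clear()`, the next `Get` simply goes back to the store, so callers never need to know whether the cache was warm.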

NOTE: For small sets of static reference data, a copy of the cached data resides on each server instance. Because the data resides on the actual server, there’s no latency with cross-server roundtrips, resulting in the fastest possible response time.

In most systems, two layers of cache are used: the in-process cache and the distributed cache. In this article, we’ll look at both.

Let’s take a look at the first and simplest type of cache you can have in Windows Azure, which is the ASP.NET in-process cache.

In-process caching with the ASP.NET cache

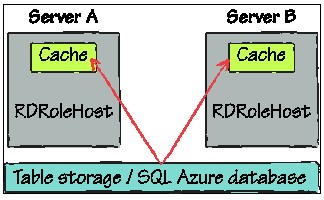

As of the PDC09 release, the only caching technology available to Windows Azure web roles is the built-in ASP.NET cache, which is an in-process individual-server cache. Figure 1 shows how the cache correlates with your web role instances within Windows Azure.

Figure 1 – The ASP.NET cache; notice that each server maintains its own copy of the cache.

Figure 1 shows that server A and server B each maintain their own cache of the data that’s been retrieved from the data store (either Table storage or the SQL Azure database). Although there’s some duplication of data, the performance gains make using this cache worthwhile.

In-process memory cache

You should also notice in figure 1 that the default ASP.NET cache is an individual-server cache that’s tied to the web server worker process (WaWebHost). In Windows Azure, any data you cache is held in the WaWebHost process memory space. If you were to kill the WaWebHost process, the cache would be destroyed and would need to be repopulated as part of the process restart.

Because the VM has a maximum of 1 GB of memory available to the server, you should keep your server cache as lean as possible.
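For reference, this is roughly what storing and reading reference data in the in-process cache can look like. It is a sketch that assumes the .NET Framework `System.Web` assembly is referenced; the `CountryCache` class, the `"countries"` key, and the one-hour expiry are illustrative choices rather than values from the book. Giving entries an absolute expiry is one simple way to stop stale data from occupying memory indefinitely.

```csharp
using System;
using System.Web;
using System.Web.Caching;

// Hypothetical helper for illustration only.
public static class CountryCache
{
    private const string Key = "countries";

    public static string[] GetCountries(Func<string[]> loadFromStore)
    {
        // The cache stores plain objects, so cast on the way out.
        // A null result means the item was never cached, has expired,
        // or was evicted under memory pressure.
        var cached = HttpRuntime.Cache[Key] as string[];
        if (cached != null)
        {
            return cached;
        }

        // Fall back to the persistent store (must not return null,
        // because the cache rejects null values).
        var fresh = loadFromStore();

        HttpRuntime.Cache.Insert(
            Key,
            fresh,
            null,                          // no cache dependency
            DateTime.UtcNow.AddHours(1),   // absolute expiry (assumed value)
            Cache.NoSlidingExpiration);

        return fresh;
    }
}
```

Because this cache lives inside the worker process, each web role instance runs the loader once for itself.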

Although in-process caching is suitable for static data, it’s not so useful when you need to share cached data across multiple load-balanced web servers. To make that scenario possible, we need to turn to a distributed cache such as Memcached.

Distributed caching with Memcached

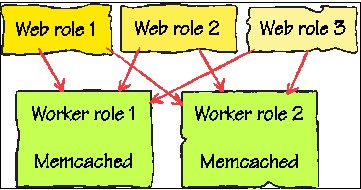

Memcached is an open-source caching provider that was originally developed for the blogging site LiveJournal. Essentially, it’s a big hash table that you can distribute across multiple servers. Figure 2 shows three Windows Azure web roles accessing data from a cache hosted in two Windows Azure worker roles.

Figure 2 – Three web roles accessing data stored in two worker role instances of Memcached. With Windows Azure, your web roles can communicate directly with worker roles, as shown here.
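To make the idea of a hash table spread across servers more concrete: a Memcached client decides which server holds a given key by hashing the key on the client side. The sketch below uses a deliberately simple hash-modulo scheme; the `ServerSelector` class is made up for illustration and the server names you pass in are placeholders. Real clients commonly use consistent hashing instead, so that adding or removing a server remaps fewer keys.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration of client-side key distribution.
public class ServerSelector
{
    private readonly IList<string> _servers;

    public ServerSelector(IList<string> servers)
    {
        if (servers == null || servers.Count == 0)
            throw new ArgumentException("At least one server is required.", "servers");
        _servers = servers;
    }

    public string SelectServer(string key)
    {
        // FNV-1a-style hash over the key's UTF-16 code units.
        // string.GetHashCode() is avoided because it can differ between
        // processes and runtimes, and every web role must map a given
        // key to the same server.
        uint hash = 2166136261;
        unchecked
        {
            foreach (char c in key)
            {
                hash ^= c;
                hash *= 16777619;
            }
        }

        // Map the hash onto one of the available servers.
        return _servers[(int)(hash % (uint)_servers.Count)];
    }
}
```

Every web role that is configured with the same server list in the same order picks the same worker role for a given key, which is why a value stored by one web role can be read by another.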

Microsoft has developed a solution accelerator that can serve as an example of how to use Memcached in Windows Azure. This accelerator contains a sample website and the worker roles that host Memcached. You can download this accelerator from http://code.msdn.microsoft.com/winazurememcached. Be aware that memcached.exe isn’t included in the download but can be found online.

Hosting Memcached

Although this example uses a worker role to host the cache, you could also host Memcached in your web role, which saves a bit of cash.

To get started with the solution accelerator, you just need to download the code and follow the instructions to build the solution. Although we won’t go through the downloaded sample, let’s take a peek at how you store and retrieve data using the accelerator.

Setting data in the cache

If you want to store some data in Memcached, you can use the following code:

AzureMemcached.Client.Store(Enyim.Caching.Memcached.StoreMode.Set, "myKey", "Hello World");

In this example, the value “Hello World” is stored against the key “myKey”. Now that you have data stored, let’s take a look at how you can get it back (regardless of which web role you’re load balanced to).

Retrieving data from the cache

Retrieving data from the cache is pretty simple. The following code will retrieve the contents of the cache using the AzureMemcached library:

var myData = AzureMemcached.Client.Get("myKey");

In this example, the value “Hello World” that you set earlier for the key “myKey” would be returned.

Although our Memcached example is cool, you’ll notice that we’re not using the ASP.NET Cache object to access and store the data. The reason is that, unlike the Session object, the Cache object (in .NET Framework 2.0, 3.5, and 3.5 SP1) doesn’t use the provider factory model; it can be used only with the built-in ASP.NET cache provider.

Using ASP.NET 4.0, you’ll be able to specify a cache provider other than the standard ASP.NET in-memory cache. Although this feature was introduced to support Microsoft’s new distributed cache product, Windows Server AppFabric Caching (which was code-named Velocity), it can be used to support other cache providers, including Memcached.